Machine Learning and VR Are Driving Prosthetics Research

Researchers in North Carolina and Arizona are revealing new technologies and techniques to make prosthetic fitting more convenient for both patients and clinicians.

September 3, 2019

Fitting a patient for a prosthetic limb is normally a painstaking and time-consuming process. In some cases, trying to determine how capable a patient may be of operating a prosthetic limb even before fitting one has also been a problem.

However, using virtual reality and reinforcement learning, researchers in North Carolina and Arizona are revealing new technologies and techniques to make prosthetic fitting more convenient for both patients and clinicians. In Charlotte, surgeons at OrthoCarolina used VR to demonstrate that patients born without hands have an inborn ability to control prosthetic hands without first undergoing targeted muscle reinnervation surgery (which traumatic amputees often require). In Raleigh and Chapel Hill, N.C., and Tempe, Ariz., engineering professors demonstrated that a tuning algorithm based on reinforcement learning could reduce the time needed to fit a robotic knee from hours to about 10 minutes.

The researchers say the breakthroughs indicate a new era of convenience and optimism may be in the offing for amputees.

"It's a very small population," OrthoCarolina hand surgeon Michael Gart, MD, said of the work he did with colleagues Bryan Loeffler, MD, and Glenn Gaston, MD. Gart estimated that the congenital amputee clinic at OrthoCarolina serves a catchment area of about one million people and had only seven to nine patients who fit the criteria for the VR study.

"Thankfully, most or all were willing and excited to participate in the study," Gart said. "It was really exciting watching the first few kids go through it. Their faces lit up when they saw they could control a hand on the screen using a limb that never had a hand."

Patients who took part in the research underwent testing on a digitized virtual reality unit, the COAPT Complete Control Room, to measure the performance of both their typically developed arm and the side missing a hand. As patients focused on thinking about moving their hands or moving the limb in the place where a hand would be, the system tracked multiple degrees of freedom, or the ability to move the hands into different positions in space. Sensors placed along the forearms to detect muscle activity determined how accurately each limb could reproduce prosthetic hand movements.

"I think it would have been difficult to do it objectively, to have a measure of how proficient one is with use, without the VR," Gart said. "It could have in theory been done, but it would have been a sort of subjective interpretation, whereas, with the VR you can position a virtual limb exactly how you want it in space, and only when they exactly match it is it counted as a completion. It certainly helps to make it worthy of study and publication."

Gart and his colleagues will present the study's findings at the annual meeting of the American Society for Surgery of the Hand, to be held Sept. 5 to 7.

He said the principles behind his group's work and that of the NCSU/UNC/ASU team were similar.

"The idea of machine learning to help fine-tune control is a shared theme," he said. "The difference in the lower extremity is they are trying to fine-tune things like where the residual limb is putting pressure on the prosthetic, and how to control gait and stiffness at the joint to match the unaffected limb, whereas the pattern recognition strategies we use are doing a similar thing through a different method."

Patient and clinician convenience was the prevailing idea behind the NCSU/UNC/ASU project, Helen Huang, a professor in the Joint Department of Biomedical Engineering at N.C. State and UNC, said. Prior to her team tackling the issue, there was no stable model for giving machines more of a role in tuning robotic knees.

"My question was how do we generate some smart machine?" Huang said. "Not something that will completely eliminate human tuning, but could help expedite it. A human expert can only adjust one parameter at a time. The machine can learn and adjust multiple parameters at once and speed up the process."

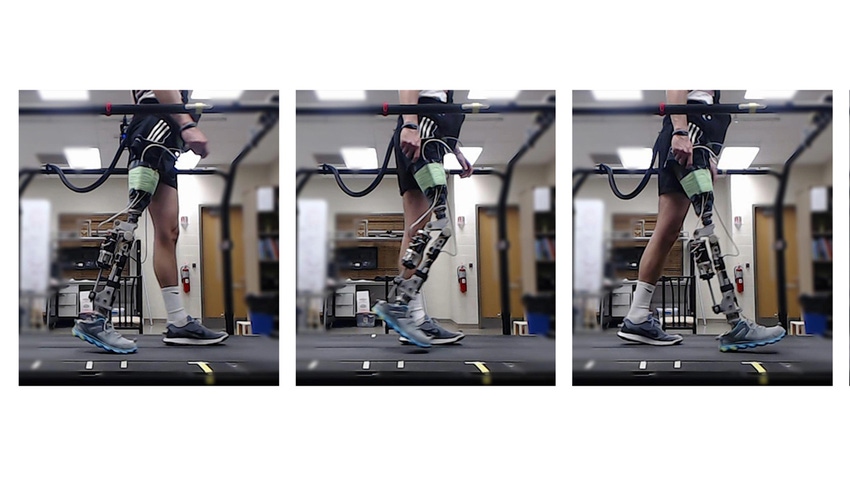

The method works by giving a patient a powered prosthetic knee with randomly set performance parameters; data on the device and the patient’s gait are collected via sensors in the device. A computer model adapts parameters on the device and compares the patient’s gait to the profile of a normal walking gait in real time. The model can tell which parameter settings improve performance and which impair it. Using reinforcement learning, the computational model can quickly identify the set of parameters that allows the patient to walk normally. In the lab, the model successfully reached target kinematics in about 300 gait cycles, or 10 minutes. Huang said she hopes the work can contribute significantly to a stable model for prosthetics tuning procedures, and even for devices such as deep brain stimulation technologies and exosuits.
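To make the tuning loop described above concrete, here is a minimal Python sketch. It is a toy illustration, not the researchers' algorithm: the article does not detail their reinforcement learning method, so a simple trial-and-error search stands in for it. The three parameters, the target values, and the `measure_gait` function are all hypothetical placeholders for the real device and its sensors.

```python
import random

# Hypothetical target gait features (e.g. knee angles in degrees); illustrative values only.
TARGET = [15.0, 60.0, 5.0]


def measure_gait(params):
    """Toy stand-in for one sensed gait cycle: each feature tracks one parameter."""
    return [2.0 * p for p in params]


def gait_error(params):
    """Squared distance between the measured gait and the target profile."""
    return sum((g - t) ** 2 for g, t in zip(measure_gait(params), TARGET))


def tune(cycles=300, step=1.0, seed=0):
    """Trial-and-error tuning: one candidate adjustment per gait cycle."""
    rng = random.Random(seed)
    # Start from randomly set parameters, as in the article's description.
    params = [rng.uniform(0.0, 40.0) for _ in TARGET]
    best = gait_error(params)
    history = [best]
    for _ in range(cycles):
        # Adjust every parameter at once, then keep the change only if the gait improved.
        trial = [p + rng.gauss(0.0, step) for p in params]
        err = gait_error(trial)
        if err < best:
            params, best = trial, err
        history.append(best)
    return params, history


params, history = tune()
print(f"error before: {history[0]:.1f}, after {len(history) - 1} cycles: {history[-1]:.3f}")
```

The sketch keeps only the adjustments that bring the measured gait closer to the target, so the error never gets worse from one cycle to the next. The default of 300 cycles mirrors the lab result reported above, which works out to about two seconds per gait cycle over 10 minutes.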

"Most devices, especially rehabilitation assistive devices, need tuning or adjustment and personalizing for every individual," Huang said. "For any assistive device that needs to be personalized, the framework of the algorithm could be used for all of them. The details need to be modified for their patient population."

Video: Complete Control Room for upper limb amputees calibration explainer, video courtesy Coapt LLC
