Using the Power of AI for Early Critical Disease Detection

AMD's Subh Bhattacharya discusses how AI's potential for diagnostic applications is limitless.

February 10, 2023

Artificial intelligence (AI) is becoming a transformational force in healthcare, delivering significant benefits for both patients and healthcare service providers. From early detection and diagnosis of critical illness to surgical tool guidance and drug discovery, the possibilities for AI in healthcare appear endless.

One of the biggest benefits AI can offer humanity is earlier diagnosis of critical illnesses. Skin cancer, including melanoma, its most aggressive type, can in most cases be cured when detected early. The five-year relative survival rate for melanoma is nearly 99% when the cancer is still localized at detection, but drops to only 22.5% once it has metastasized to distant organs.

Traditional methods of detection today include physical examination, blood tests, diagnostic imaging, and more invasive methods like biopsy. In the future, capturing video of a visibly affected area and running a trained AI deep learning algorithm on a small client device at the edge could identify this critical illness earlier, in a more cost-effective and less invasive manner. A detection made this way can then be verified by more traditional and invasive methods.

What is a deep-learning neural network and how is it developed and deployed?

A medical, AI-based deep learning method to detect critical illnesses like melanoma can be developed in two stages. The first stage is to create and train a model using available medical images previously taken with dermatoscopic (skin surface microscopic) cameras. The second stage is to deploy that trained model on a small computing device with a camera and run AI inference with it to produce a result for a patient presenting with a visible skin abnormality.

Creating and training the deep learning model

Creating and training the neural network model requires large, clean, labeled datasets of cutaneous (skin) lesion images, organized into, for example, four different image sets:

Images that were deemed ‘normal blisters’

Images deemed as ‘nodules or cysts’ growing under the skin

Images identified as squamous cell carcinoma (a less lethal form of skin cancer)

Images identified as melanoma (the most aggressive and potentially fatal type of skin cancer, discussed above)

Datasets typically need to be as large as possible (with hundreds of thousands of images) for inferencing accuracy to be high. Fortunately, cleaned, pre-processed medical imaging datasets have been released for academic research and can be obtained for this purpose.

When pre-processing the images within the dataset, one must also keep the number of images in each class balanced, to avoid a class imbalance and achieve the most accurate result. Training requires vast computing resources and is typically done on graphics processing units (GPUs).
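When a dataset cannot be rebalanced by collecting more images, one common workaround is to weight each class inversely to its frequency during training. The following is a minimal sketch of that idea, not the method used for any particular model described here. The class names, the `class_weights` helper, and the label counts are all made up for illustration.

```python
from collections import Counter

def class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count).

    A class with few images gets a weight above 1, a class with many
    images gets a weight below 1.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {name: n_samples / (n_classes * count) for name, count in counts.items()}

# Hypothetical, deliberately imbalanced label list (illustrative counts only).
labels = (
    ["blister"] * 600
    + ["nodule_or_cyst"] * 250
    + ["squamous_cell_carcinoma"] * 100
    + ["melanoma"] * 50
)

weights = class_weights(labels)
for name, weight in sorted(weights.items(), key=lambda item: item[1]):
    print(f"{name}: {weight:.3f}")
```

In this made-up example the rarest class, melanoma, receives the largest weight, so each of its images counts for more in the training loss than an image from the most common class.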

Once the deep learning model is trained and ready, an efficient way to deploy it is on an adaptive hardware platform containing deep learning processing units (DPUs). These are programmable execution units running on embedded devices such as adaptive SoCs (systems-on-chip) based on FPGAs (field-programmable gate arrays), which combine FPGA and ASIC-like logic with embedded CPU cores. These embedded platforms are well suited to running inference with a deep learning model on an edge client device because they offer high performance in a small, cost-effective footprint.

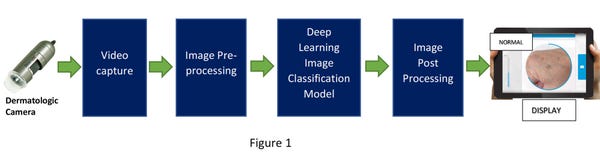

Such an edge device requires a connected dermatologic imaging camera that focuses on the affected skin area and captures continuous video, which is fed into the pre-processing engine using an Image Signal Processor (ISP). The pre-processed image is then fed into the deployed deep learning model running on the DPU, which classifies the image into one of the four buckets defined earlier. The process flow is shown in figure 1.
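The final classification step can be sketched in a few lines. This is an illustrative sketch only: it assumes the deployed model returns one raw score (logit) per bucket, and the bucket names, the `classify` helper, and the example scores are hypothetical rather than taken from any DPU toolchain.

```python
import math

# Hypothetical bucket names mirroring the four image sets described earlier.
BUCKETS = ["blister", "nodule_or_cyst", "squamous_cell_carcinoma", "melanoma"]

def softmax(logits):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(logits):
    """Return the most probable bucket and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return BUCKETS[best], probs[best]

# Made-up scores standing in for the model output on one pre-processed frame.
label, confidence = classify([0.2, 1.1, 0.5, 3.0])
print(label, round(confidence, 3))
```

Because the camera delivers continuous video, a real pipeline would likely run this per frame and aggregate the results over time; that aggregation is left out here.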

With an adaptive embedded FPGA-based SoC platform, image classification can be achieved with very low latency and high accuracy. It's important to remember that attaining extremely high accuracy depends on training the deep learning model with a very large, cleaned, and balanced dataset. Also, more classification buckets can be added as appropriate medical images become available.

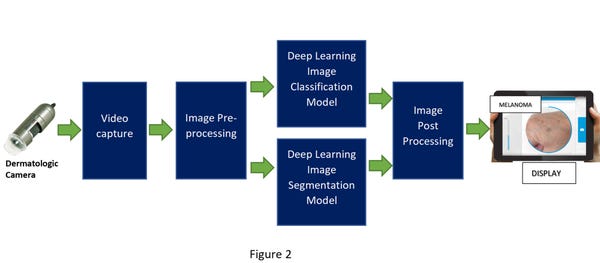

Important identifying features of melanoma are irregularity of the lesion border, the size and growth rate of the lesion, and certain changes in its shape and color. Using previously available clinical research data, another AI-based deep learning model can be created, trained, and deployed to perform image segmentation analysis, providing additional proof points for a more accurate diagnosis.
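To make border irregularity concrete, the sketch below computes a simple compactness score, perimeter squared divided by 4π times area, from a binary segmentation mask. It assumes the segmentation model's output has already been reduced to a grid of 0s and 1s. The function names and toy masks are hypothetical, and the score is a generic shape descriptor, not a clinical criterion or necessarily what a deployed model would compute.

```python
import math

def area_and_perimeter(mask):
    """Count lesion pixels and exposed pixel edges in a 0/1 grid."""
    rows, cols = len(mask), len(mask[0])
    area = 0
    perimeter = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            area += 1
            # An edge is exposed if the neighbor is off-grid or background.
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < rows and 0 <= nc < cols
                if not inside or not mask[nr][nc]:
                    perimeter += 1
    return area, perimeter

def irregularity_index(mask):
    """Perimeter^2 / (4 * pi * area); larger values mean a less compact shape."""
    area, perimeter = area_and_perimeter(mask)
    if area == 0:
        return 0.0
    return perimeter ** 2 / (4 * math.pi * area)

# Toy masks: a compact square and a more ragged cross shape.
square = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
cross = [[0, 1, 0], [1, 1, 1], [0, 1, 0]]

print(round(irregularity_index(square), 3))
print(round(irregularity_index(cross), 3))
```

The cross scores higher than the square because it has more boundary per unit of area, which is the intuition behind using border shape as an extra signal.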

In that scenario, after the video capture, the pre-processed image would be fed into two deep learning models: one for image classification and one for image segmentation. Post-processing would then cross-check and combine the inferencing results from the two models into one outcome (shown in figure 2). This has the potential to improve diagnostic accuracy.
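One way to picture the cross-check is as a small rule that compares the two models' outputs. The sketch below is an assumption about how such post-processing might look, not a description of an actual product: the `Finding` fields, the `combine` function, the outcome names, and both thresholds are invented for illustration and have no clinical basis.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    label: str           # bucket chosen by the classification model
    confidence: float    # probability assigned to that bucket
    irregularity: float  # shape score derived from the segmentation output

def combine(finding, conf_threshold=0.7, irregularity_threshold=1.8):
    """Cross-check the two model outputs and return a single outcome.

    Both thresholds are placeholder values chosen for this example.
    """
    classifier_flag = (
        finding.label == "melanoma" and finding.confidence >= conf_threshold
    )
    segmentation_flag = finding.irregularity >= irregularity_threshold
    if classifier_flag and segmentation_flag:
        return "agree_suspicious"
    if classifier_flag or segmentation_flag:
        return "disagree_review"
    return "agree_unremarkable"

print(combine(Finding("melanoma", 0.91, 2.3)))
print(combine(Finding("melanoma", 0.91, 1.2)))
print(combine(Finding("blister", 0.88, 1.1)))
```

Keeping a separate outcome for disagreement matters: when the two models point in different directions, the case can be routed for verification by traditional methods rather than being silently resolved in one model's favor.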

The potential is limitless

Imagine a world in which we can diagnose cancer, blindness, or heart disease early enough to save lives. Advancements in AI, ML, and computing are bringing this future closer to reality.

These are just some of the early areas where AI algorithms are being applied to infer and detect critical illnesses earlier to potentially save lives. As more models using AI algorithms and medical imaging data are developed, more of these can be programmed into these small, cost-effective, low-power, programmable, adaptive embedded SoC devices with advanced software tools to diagnose critical illnesses early.

With the development of pervasive AI and medical imaging technology, and with continued improvement in adaptive embedded devices, the possibilities to leverage the power of AI in healthcare are limitless.
