From Our Partners

Protein Adhesion and Metal Interaction in Medical Diagnostic Equipment

Abbott Laboratories and SilcoTek share research on coatings to prevent unwanted protein adsorption on 316L stainless steel.

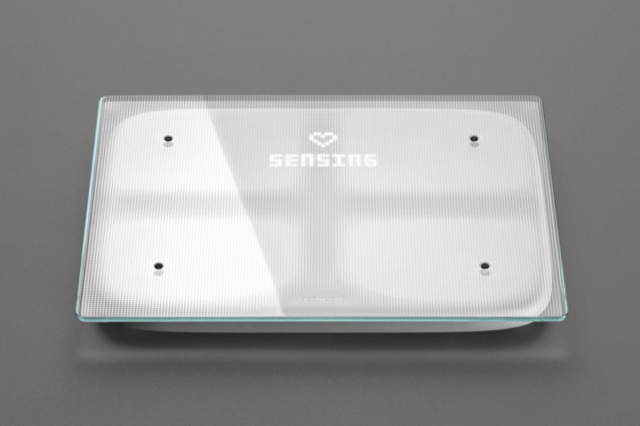

New technologies like wireless patch electrocardiograms and cellular-connected scales aim to change remote heart failure care, potentially leading to earlier detection and more efficient management.

A medtech analyst looks at how the Kalamazoo, MI-based company could potentially enter the surgical robotics market.

This week in Pedersen’s POV, our senior editor advocates for changes to the European Union’s Medical Device Regulation.

Bethany Corbin details the importance of understanding these issues, citing femtech examples like reproductive health privacy post-Dobbs v. Jackson Women’s Health Organization and potential alienation of intended users.
