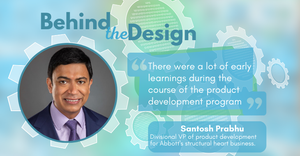

Questions and Answers

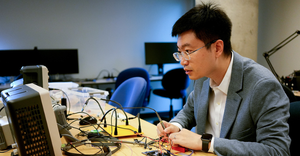

R&D

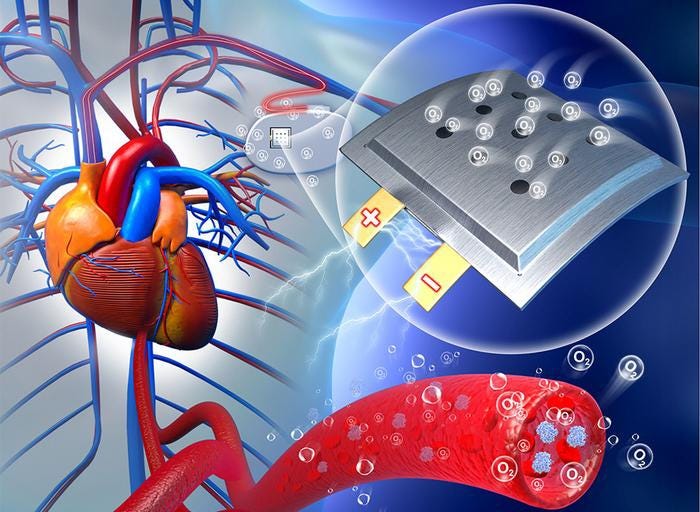

Bridging the Gap Between Research & Medical Device Development

In this Q&A, MD+DI examines how better collaboration between academic institutions and industry could improve the production of medical devices.
