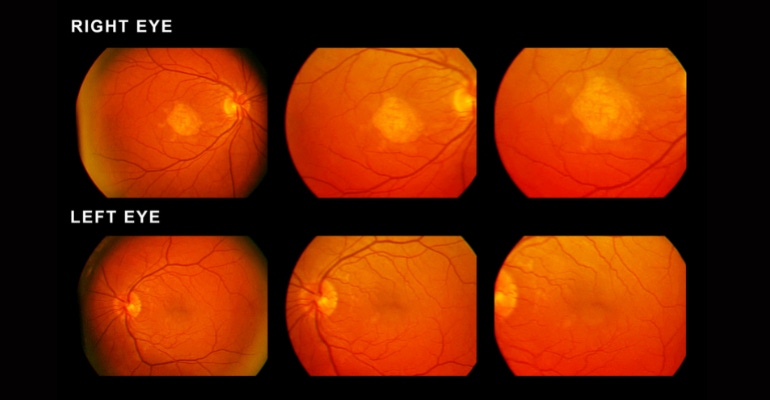

Researchers in Taiwan demonstrate how machine learning can assist in the detection of age-related macular degeneration from fundus photos.

January 12, 2023

A research team from National Cheng Kung University (NCKU), led by Chih-Chung Hsu, an assistant professor at the Institute of Data Science, NCKU, has demonstrated that machine learning can assist in the detection of age-related macular degeneration from fundus photos.

The research was part of the ADAM challenge (Automatic Detection challenge on Age-related Macular degeneration), which was a satellite event of the International Symposium on Biomedical Imaging (ISBI) 2020 conference. The ADAM challenge drew participation from 11 teams from all over the world. The details of the challenge and its results were recently published in the journal IEEE Transactions on Medical Imaging.

Age-related macular degeneration (AMD) is a leading cause of vision loss. If left untreated, AMD can lead to permanent vision loss and irreversible damage to the retina. Early detection is essential for timely treatment. Fundus photography is a powerful tool that can be used to detect the disease, but it is time-consuming and requires the expertise of a medical professional. This is where the ADAM challenge comes in.

The challenge was broken down into four tasks addressing the critical aspects of detecting and characterizing age-related macular degeneration: AMD classification, optic disc detection and segmentation, fovea localization, and lesion detection and segmentation. A dataset of 1200 fundus images was made available for the challenge. The team from NCKU used a different machine learning architecture for each of the four tasks.

For the first task, the team used an EfficientNet architecture for the binary classification of AMD. Then, for the optic disc detection and segmentation task, they used the EfficientNet architecture for classification combined with U-Net for segmentation, trained with a weighted cross-entropy loss function. For the fovea localization task, the team used a combination of two U-Net architectures, a Mask R-CNN and ResNet. And for the last task of lesion detection and segmentation, they used DeepLabv3 with ResNet.
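To give a sense of what a weighted cross-entropy loss does in a segmentation setting, here is a minimal sketch in plain Python. It is a generic textbook form, not the NCKU team's implementation: the function name, the `pos_weight` parameter, and the choice to up-weight foreground (optic disc) pixels are all assumptions made for illustration.

```python
import math


def weighted_cross_entropy(probs, labels, pos_weight=1.0, eps=1e-7):
    """Mean binary cross-entropy with foreground pixels scaled by pos_weight.

    probs      : predicted foreground probabilities, one per pixel
    labels     : ground-truth labels (1 = foreground, 0 = background)
    pos_weight : hypothetical weight for foreground pixels (assumption)
    """
    if len(probs) != len(labels):
        raise ValueError("probs and labels must have the same length")
    if not probs:
        raise ValueError("at least one pixel is required")

    total = 0.0
    for p, y in zip(probs, labels):
        # Clamp the probability so that log() never receives 0.
        p = min(max(p, eps), 1.0 - eps)
        total += -(pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)
```

With `pos_weight` greater than 1, mistakes on the relatively few foreground pixels cost more than mistakes on the background, which is one common way to handle a small target region such as the optic disc.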

Of the four architectures, the one used for fovea localization stood out. The team used a multiple-blocks regression strategy that divides the fundus image into multiple blocks, after which each block was one-hot-encoded.
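The article does not spell out the details of the block encoding, but one plausible reading is that the image is split into a grid and the block containing the fovea is marked with a one-hot vector. The sketch below illustrates that reading only; the function name, the square grid, and the default grid size are hypothetical and not taken from the team's work.

```python
def fovea_block_one_hot(x, y, width, height, grid=4):
    """Return a one-hot list marking the grid block that contains (x, y).

    The image is divided into grid x grid equal blocks, numbered row by row
    from the top-left corner. The grid size is an illustrative assumption.
    """
    if grid < 1:
        raise ValueError("grid must be at least 1")
    if not (0 <= x < width and 0 <= y < height):
        raise ValueError("fovea coordinates must lie inside the image")

    # Map pixel coordinates to block column and row indices.
    col = min(int(x * grid / width), grid - 1)
    row = min(int(y * grid / height), grid - 1)

    one_hot = [0] * (grid * grid)
    one_hot[row * grid + col] = 1
    return one_hot
```

For example, `fovea_block_one_hot(60, 10, 100, 100, grid=2)` marks the top-right block of a 2 x 2 grid. A coarse block label like this could then be refined by a regression step within the selected block.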

"Early detection of AMD is essential for its treatment. We wanted to achieve a highly accurate symptom localization for fundus image, helping the doctors to have external references for their diagnosis," Hsu said about the machine learning architecture.

The model proposed by the NCKU team was comparable in performance to other state-of-the-art models while being extremely lightweight. In addition, the team's ensemble strategy improved the model's performance, and the use of prior clinical knowledge helped to achieve better results.

"Given the fundus image, it is possible to diagnose AMD in a real-time sense, which might reduce the effort of doctors. Further, the proposed model can be integrated into hardware to be one of the standard pieces of equipment in any clinic. That would provide us with an AI-aided diagnosis, which could be very helpful for junior doctors or even interns," Hsu said.

The team has also made available a video describing the study.
